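Preface

Official tutorial: Diffusers

This article covers:

1. Training a simple unconditional diffusion model.
2. Problems encountered during the experiment and how they were solved.

Note: the experiments were run on Google Colab.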

Preparation

!pip install diffusers[training]

# Git LFS, for versioning large files
!sudo apt -qq install git-lfs
!git config --global credential.helper store

# Log in to your Hugging Face account
from huggingface_hub import notebook_login

notebook_login()

Setting the training hyperparameters

from dataclasses import dataclass

@dataclass
class TrainingConfig:
    image_size = 128  # resolution of the generated images
    train_batch_size = 16
    eval_batch_size = 16
    num_epochs = 50
    gradient_accumulation_steps = 1
    learning_rate = 1e-4
    lr_warmup_steps = 500
    save_image_epochs = 10
    save_model_epochs = 30
    mixed_precision = "fp16"
    output_dir = "ddpm-demo-128"
    push_to_hub = False  # whether to upload the model to the HF Hub; uploading requires logging in with an access token
    # hub_model_id = "<your-username>/<my-awesome-model>"
    # hub_private_repo = False
    # overwrite_output_dir = True  # overwrite an older model
    seed = 0

config = TrainingConfig()

Here I set push_to_hub to False because I upload the model separately later on. If you want to upload during training, set it to True, uncomment the lines below it, and fill in your own information.
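One detail of the config class is easy to miss: `@dataclass` only turns annotated names into fields, so un-annotated attributes like the ones above stay plain class attributes. They can still be read and reassigned on the instance, but they cannot be passed to the constructor. The sketch below illustrates the difference with two hypothetical classes (`PlainConfig` and `TypedConfig` are illustration names, not part of the tutorial).

```python
from dataclasses import dataclass, fields


@dataclass
class PlainConfig:
    # No annotations: these are ordinary class attributes, not dataclass fields.
    image_size = 128
    learning_rate = 1e-4


@dataclass
class TypedConfig:
    # Annotated: these become real dataclass fields with defaults.
    image_size: int = 128
    learning_rate: float = 1e-4


plain_field_names = [f.name for f in fields(PlainConfig)]
typed_field_names = [f.name for f in fields(TypedConfig)]

# Plain attributes are changed after construction, as the tutorial does
# with config.dataset_name.
plain = PlainConfig()
plain.image_size = 64

# Annotated fields can also be overridden directly in the constructor.
custom = TypedConfig(image_size=64)

# Passing a keyword to the un-annotated version is rejected.
try:
    PlainConfig(image_size=64)
    constructor_accepts_kwargs = True
except TypeError:
    constructor_accepts_kwargs = False
```

Either style works for this notebook; annotations mainly help if you want to create several config variants in one session.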

Loading the dataset

(1) Using a provided dataset

# Butterfly dataset
from datasets import load_dataset

config.dataset_name = "huggan/smithsonian_butterflies_subset"
dataset = load_dataset(config.dataset_name, split="train")

This example uses the butterfly dataset; you can choose a different one from Datasets.

(二)使用自己的数据集

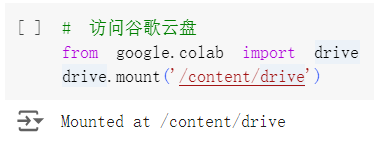

如果想使用自己的数据集,需要先将数据集上传到同一账号的 Google Drive,然后访问。

左侧文件夹中可以看到“drive”即为连接成功。

注意:数据集结构应满足如下目录结构:

folder/train/dog/golden_retriever.png

folder/train/dog/german_shepherd.png

folder/train/dog/chihuahua.png

folder/train/cat/maine_coon.png

folder/train/cat/bengal.png

folder/train/cat/birman.png
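Before loading a folder like this, it can help to confirm that every image sits exactly one level below a label directory. The following is a minimal sketch using only the standard library; `count_images_per_label` and the list of accepted suffixes are my own illustrative choices, and the temporary folder simply stands in for a real dataset.

```python
from collections import Counter
from pathlib import Path
import tempfile

# Assumption: only these extensions count as images for this check.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


def count_images_per_label(split_dir):
    """Count files matching <split_dir>/<label>/<image> by label name."""
    counts = Counter()
    for path in Path(split_dir).glob("*/*"):
        if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES:
            counts[path.parent.name] += 1
    return dict(counts)


# Build a tiny stand-in dataset and check it.
with tempfile.TemporaryDirectory() as root:
    for rel in ["dog/golden_retriever.png", "dog/chihuahua.png", "cat/bengal.png"]:
        target = Path(root, "train", rel)
        target.parent.mkdir(parents=True, exist_ok=True)
        target.touch()
    demo_counts = count_images_per_label(Path(root, "train"))
```

An empty result, or a label with far fewer images than expected, usually means the files are nested one level too deep or too shallow.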

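If you need to upload a dataset for tasks such as object detection, see the requirements in the "Create an image dataset" documentation.

To push the dataset to the Hub, run the following.

# Upload the dataset
from datasets import load_dataset

dataset = load_dataset("imagefolder", data_dir="drive/MyDrive/dataset_name")
dataset.push_to_hub("dm/demo-dataset")

Load the uploaded dataset (here, from the Google Drive folder).

# Load the training dataset
from datasets import load_dataset

dataset = load_dataset("imagefolder", data_dir="drive/MyDrive/dataset_name", split="train")
print(dataset)

Datasets uses the Image feature to decode the image data automatically and load it as PIL.Image.

Because the images differ in size, they need to be preprocessed.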

# Dataset preprocessing
from torchvision import transforms

preprocess = transforms.Compose(
    [
        transforms.Resize((config.image_size, config.image_size)),
        transforms.RandomHorizontalFlip(),
        transforms.ToTensor(),
        transforms.Normalize([0.5], [0.5]),
    ]
)

def transform(examples):
    # Preprocessed tensors are exposed under the "images" key
    images = [preprocess(image.convert("RGB")) for image in examples["image"]]
    return {"images": images}

# set_transform applies preprocess on the fly during training
dataset.set_transform(transform)

# Wrap the dataset in a DataLoader for training
import torch

train_dataloader = torch.utils.data.DataLoader(dataset, batch_size=config.train_batch_size, shuffle=True)
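To make the value ranges concrete: `ToTensor` scales pixels to [0, 1], and `Normalize([0.5], [0.5])` then computes `(x - 0.5) / 0.5`, which lands in [-1, 1]. The sketch below reproduces that arithmetic on single values in plain Python, together with the inverse mapping back to 0..255 that is used when a tensor is displayed as an image. The helper names and the clamping step are my own additions for illustration.

```python
def normalize(pixel, mean=0.5, std=0.5):
    """Per-value arithmetic of transforms.Normalize: (x - mean) / std."""
    return (pixel - mean) / std


def to_uint8(value):
    """Map a value from [-1, 1] to an integer in [0, 255].

    Clamping first is an added safety step: noisy images can fall
    outside [-1, 1], and out-of-range values do not convert cleanly.
    """
    clamped = max(-1.0, min(1.0, value))
    return int((clamped + 1.0) * 127.5)


black = normalize(0.0)       # darkest pixel after ToTensor
white = normalize(1.0)       # brightest pixel after ToTensor
mid_gray = normalize(0.5)

restored_black = to_uint8(black)
restored_white = to_uint8(white)
restored_overflow = to_uint8(1.8)   # a value pushed out of range by noise
```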

Creating a UNet2DModel

from diffusers import UNet2DModel

model = UNet2DModel(
    sample_size=config.image_size,  # the target image resolution
    in_channels=3,  # the number of input channels, 3 for RGB images
    out_channels=3,  # the number of output channels
    layers_per_block=2,  # how many ResNet layers to use per UNet block
    block_out_channels=(128, 128, 256, 256, 512, 512),  # the number of output channels for each UNet block
    down_block_types=(
        "DownBlock2D",  # a regular ResNet downsampling block
        "DownBlock2D",
        "DownBlock2D",
        "DownBlock2D",
        "AttnDownBlock2D",  # a ResNet downsampling block with spatial self-attention
        "DownBlock2D",
    ),
    up_block_types=(
        "UpBlock2D",  # a regular ResNet upsampling block
        "AttnUpBlock2D",  # a ResNet upsampling block with spatial self-attention
        "UpBlock2D",
        "UpBlock2D",
        "UpBlock2D",
        "UpBlock2D",
    ),
)

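You can check that the shape of a sample image matches the shape of the model output.

# The transform stores the preprocessed tensor under "images"
sample_image = dataset[0]["images"].unsqueeze(0)
print("Input shape:", sample_image.shape)
# Input shape: torch.Size([1, 3, 128, 128])

print("Output shape:", model(sample_image, timestep=0).sample.shape)
# Output shape: torch.Size([1, 3, 128, 128])

In my tests, raising the image size to 256, 512, or larger ran out of GPU memory when training on Google Colab, so I recommend an image resolution of 128.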
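A rough way to see why larger images are so much more expensive is to look at how the feature-map size changes through the six down blocks, and how the pixel count grows with resolution. The sketch below assumes that every down block except the last halves the resolution, which is my understanding of how this model is built; treat the numbers as an estimate rather than a measurement of actual memory use.

```python
def feature_map_sizes(image_size, num_blocks=6):
    """Spatial size seen by each down block."""
    sizes = []
    size = image_size
    for block in range(num_blocks):
        sizes.append(size)
        if block < num_blocks - 1:  # assumption: the final block does not downsample
            size //= 2
    return sizes


def pixel_ratio(new_size, base_size=128):
    """How many times more pixels an image of new_size has than base_size."""
    return (new_size / base_size) ** 2


sizes_128 = feature_map_sizes(128)
ratio_256 = pixel_ratio(256)
ratio_512 = pixel_ratio(512)
```

Activation memory grows roughly with the pixel count, so 256 x 256 images need on the order of 4 times the activation memory of 128 x 128 images, and 512 x 512 on the order of 16 times. Lowering train_batch_size is the usual way to compensate.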

Creating a scheduler

# Create a scheduler
import torch
from PIL import Image
from diffusers import DDPMScheduler

noise_scheduler = DDPMScheduler(num_train_timesteps=1000)
noise = torch.randn(sample_image.shape)
timesteps = torch.LongTensor([100])
noisy_image = noise_scheduler.add_noise(sample_image, noise, timesteps)

# Convert the noisy tensor to a standard image layout: [B, C, H, W] -> [B, H, W, C]; value range [-1, 1] -> [0, 2] -> [0, 255]
Image.fromarray(((noisy_image.permute(0, 2, 3, 1) + 1.0) * 127.5).type(torch.uint8).numpy()[0])
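For intuition about what `add_noise` computes, the DDPM forward process mixes the clean image and the noise as x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * noise, where alpha_bar_t is the cumulative product of (1 - beta). The plain-Python sketch below works on single values and assumes the scheduler's default linear beta schedule from 1e-4 to 0.02; the function names are mine, not the library's.

```python
import math


def linear_betas(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Evenly spaced betas (assumed default schedule of DDPMScheduler)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def alphas_cumprod(betas):
    """Running product of (1 - beta), i.e. alpha_bar_t for every timestep."""
    result = []
    product = 1.0
    for beta in betas:
        product *= 1.0 - beta
        result.append(product)
    return result


def add_noise(x0, noise, t, alpha_bar):
    """Forward diffusion for one scalar value at timestep t."""
    return math.sqrt(alpha_bar[t]) * x0 + math.sqrt(1.0 - alpha_bar[t]) * noise


alpha_bar = alphas_cumprod(linear_betas())

# Weights on the clean signal and on the noise at two timesteps.
signal_100, noise_100 = math.sqrt(alpha_bar[100]), math.sqrt(1.0 - alpha_bar[100])
signal_999, noise_999 = math.sqrt(alpha_bar[999]), math.sqrt(1.0 - alpha_bar[999])

noisy_value = add_noise(0.5, -0.3, 100, alpha_bar)
```

At timestep 100 most of the weight is still on the image, which is why the noisy sample remains recognizable; by the last timestep almost all of it is on the noise.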

Loss function and related components

The model uses mean squared error (MSE) as its loss function.
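As a reminder of what that loss measures, MSE with mean reduction averages the squared difference between the predicted noise and the noise that was actually added, over all elements. Here is a small plain-Python version on flat lists; the values are made up for illustration.

```python
def mse_loss(predicted, target):
    """Mean of squared element-wise differences."""
    if len(predicted) != len(target):
        raise ValueError("predicted and target must have the same length")
    return sum((p - t) ** 2 for p, t in zip(predicted, target)) / len(predicted)


true_noise = [0.5, -1.0, 0.25, 0.0]

# A perfect prediction gives zero loss.
perfect = mse_loss(true_noise, true_noise)

# Every element off by 0.5 gives 0.5 ** 2 = 0.25.
off_by_half = mse_loss([n + 0.5 for n in true_noise], true_noise)
```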

# The training objective is to predict the noise added to the image; compute the loss for this step
import torch.nn.functional as F

noise_pred = model(noisy_image, timesteps).sample
loss = F.mse_loss(noise_pred, noise)

# Optimizer and learning rate scheduler
from diffusers.optimization import get_cosine_schedule_with_warmup

optimizer = torch.optim.AdamW(model.parameters(), lr=config.learning_rate)
lr_scheduler = get_cosine_schedule_with_warmup(
    optimizer=optimizer,
    num_warmup_steps=config.lr_warmup_steps,
    num_training_steps=(len(train_dataloader) * config.num_epochs),
)
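The shape of this schedule is simple to reproduce by hand: the learning rate multiplier rises linearly from 0 to 1 during warmup, then follows half a cosine wave down to 0. The sketch below is my own reimplementation of that shape for illustration, not the library code, and the step counts assume a hypothetical 63 batches per epoch over 50 epochs.

```python
import math


def lr_multiplier(step, warmup_steps, total_steps):
    """Factor applied to the base learning rate at a given optimizer step."""
    if step < warmup_steps:
        # Linear warmup from 0 to 1.
        return step / max(1, warmup_steps)
    # Half-cosine decay from 1 to 0 over the remaining steps.
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))


warmup_steps = 500
total_steps = 63 * 50  # hypothetical: 63 batches per epoch, 50 epochs

multipliers = {
    step: lr_multiplier(step, warmup_steps, total_steps)
    for step in (0, 250, 500, 1000, 2000, total_steps)
}
```

With short runs, keep an eye on lr_warmup_steps: if it is close to the total number of steps, training spends most of its time at a reduced learning rate.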

# Evaluate the model
from diffusers import DDPMPipeline
from diffusers.utils import make_image_grid
import os

def evaluate(config, epoch, pipeline):
    # Sample some images from random noise (this is the backward diffusion process).
    # The default pipeline output type is `List[PIL.Image]`
    images = pipeline(
        batch_size=config.eval_batch_size,
        # Use a separate torch generator to avoid rewinding the random state of the main training loop
        generator=torch.Generator(device='cpu').manual_seed(config.seed),
    ).images

    # Make a grid out of the images
    image_grid = make_image_grid(images, rows=4, cols=4)

    # Save the images
    test_dir = os.path.join(config.output_dir, "samples")
    os.makedirs(test_dir, exist_ok=True)
    image_grid.save(f"{test_dir}/{epoch:04d}.png")

During evaluation, the generated images are saved as a grid whose size depends on the eval_batch_size hyperparameter: the rows and cols arguments of make_image_grid must satisfy eval_batch_size = rows * cols. Adjust them as needed.
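If you change eval_batch_size often, the grid dimensions can be derived instead of hard-coded. The helper below is a hypothetical addition (not part of the tutorial) that picks the most square pair of factors whose product equals the batch size.

```python
import math


def grid_shape(batch_size):
    """Return (rows, cols) with rows * cols == batch_size and rows <= cols."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    rows = math.isqrt(batch_size)
    # Walk down from the square root until we find a divisor.
    while batch_size % rows != 0:
        rows -= 1
    return rows, batch_size // rows


shape_16 = grid_shape(16)
shape_12 = grid_shape(12)
shape_7 = grid_shape(7)   # prime sizes fall back to a single row
```

The result could then be passed along as `rows, cols = grid_shape(config.eval_batch_size)` before building the grid.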

Training the model

# Wrap the components in a training loop
from accelerate import Accelerator
from huggingface_hub import create_repo, upload_folder
from tqdm.auto import tqdm
from pathlib import Path
import os

def train_loop(config, model, noise_scheduler, optimizer, train_dataloader, lr_scheduler):
    # Initialize accelerator and tensorboard logging
    accelerator = Accelerator(
        mixed_precision=config.mixed_precision,
        gradient_accumulation_steps=config.gradient_accumulation_steps,
        log_with="tensorboard",
        project_dir=os.path.join(config.output_dir, "logs"),
    )
    if accelerator.is_main_process:
        if config.output_dir is not None:
            os.makedirs(config.output_dir, exist_ok=True)
        if config.push_to_hub:
            repo_id = create_repo(
                repo_id=config.hub_model_id or Path(config.output_dir).name, exist_ok=True
            ).repo_id
        accelerator.init_trackers("train_example")

    # Prepare everything
    # There is no specific order to remember, you just need to unpack the
    # objects in the same order you gave them to the prepare method.
    model, optimizer, train_dataloader, lr_scheduler = accelerator.prepare(
        model, optimizer, train_dataloader, lr_scheduler
    )

    global_step = 0

    # Now you train the model
    for epoch in range(config.num_epochs):
        progress_bar = tqdm(total=len(train_dataloader), disable=not accelerator.is_local_main_process)
        progress_bar.set_description(f"Epoch {epoch}")

        for step, batch in enumerate(train_dataloader):
            # The transform stores the preprocessed tensors under "images"
            clean_images = batch["images"]
            # Sample noise to add to the images
            noise = torch.randn(clean_images.shape, device=clean_images.device)
            bs = clean_images.shape[0]

            # Sample a random timestep for each image
            timesteps = torch.randint(
                0, noise_scheduler.config.num_train_timesteps, (bs,), device=clean_images.device,
                dtype=torch.int64
            )

            # Add noise to the clean images according to the noise magnitude at each timestep
            # (this is the forward diffusion process)
            noisy_images = noise_scheduler.add_noise(clean_images, noise, timesteps)

            with accelerator.accumulate(model):
                # Predict the noise residual
                noise_pred = model(noisy_images, timesteps, return_dict=False)[0]
                loss = F.mse_loss(noise_pred, noise)
                accelerator.backward(loss)

                if accelerator.sync_gradients:
                    accelerator.clip_grad_norm_(model.parameters(), 1.0)
                optimizer.step()
                lr_scheduler.step()
                optimizer.zero_grad()

            progress_bar.update(1)
            logs = {"loss": loss.detach().item(), "lr": lr_scheduler.get_last_lr()[0], "step": global_step}
            progress_bar.set_postfix(**logs)
            accelerator.log(logs, step=global_step)
            global_step += 1

        # After each epoch you optionally sample some demo images with evaluate() and save the model
        if accelerator.is_main_process:
            pipeline = DDPMPipeline(unet=accelerator.unwrap_model(model), scheduler=noise_scheduler)

            if (epoch + 1) % config.save_image_epochs == 0 or epoch == config.num_epochs - 1:
                evaluate(config, epoch, pipeline)

            if (epoch + 1) % config.save_model_epochs == 0 or epoch == config.num_epochs - 1:
                if config.push_to_hub:
                    upload_folder(
                        repo_id=repo_id,
                        folder_path=config.output_dir,
                        commit_message=f"Epoch {epoch}",
                        ignore_patterns=["step_*", "epoch_*"],
                    )
                else:
                    pipeline.save_pretrained(config.output_dir)

# Launch training
from accelerate import notebook_launcher

args = (config, model, noise_scheduler, optimizer, train_dataloader, lr_scheduler)
notebook_launcher(train_loop, args, num_processes=1)

When training finishes, the model files are saved to the specified output_dir.
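To know in advance when sample grids and checkpoints will appear, you can replay the two conditions from the training loop outside of it. The sketch below uses the values from the config (50 epochs, images every 10 epochs, model every 30 epochs); `trigger_epochs` is a helper name I made up.

```python
def trigger_epochs(num_epochs, every):
    """Zero-based epochs at which the loop's save condition is true."""
    return [
        epoch
        for epoch in range(num_epochs)
        if (epoch + 1) % every == 0 or epoch == num_epochs - 1
    ]


image_epochs = trigger_epochs(num_epochs=50, every=10)
model_epochs = trigger_epochs(num_epochs=50, every=30)

# evaluate() names each grid after the zero-based epoch index.
sample_files = [f"{epoch:04d}.png" for epoch in image_epochs]
```

Note that the file names use the zero-based epoch index, so the grid written after the tenth epoch is 0009.png.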

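Uploading the model

# Log in to your account
from huggingface_hub import notebook_login

notebook_login()

# Upload the model
from huggingface_hub import get_full_repo_name

model_name = "ddpm_class_128"
hub_model_id = get_full_repo_name(model_name)

# Check the full repository name
hub_model_id

Uploading directly raised an error for me. If you run into the same problem, create the model repository on Hugging Face first; the upload then goes through without errors.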

from huggingface_hub import HfApi, create_repo

# Repository already created
# create_repo(hub_model_id)

api = HfApi()

# Mirror the local scheduler/ and unet/ subfolders in the repository,
# matching the layout that save_pretrained writes to output_dir
api.upload_folder(
    folder_path="ddpm-demo-128/scheduler",
    path_in_repo="scheduler",
    repo_id=hub_model_id,
)

api.upload_folder(
    folder_path="ddpm-demo-128/unet",
    path_in_repo="unet",
    repo_id=hub_model_id,
)

api.upload_file(
    path_or_fileobj="ddpm-demo-128/model_index.json",
    path_in_repo="model_index.json",
    repo_id=hub_model_id,
)

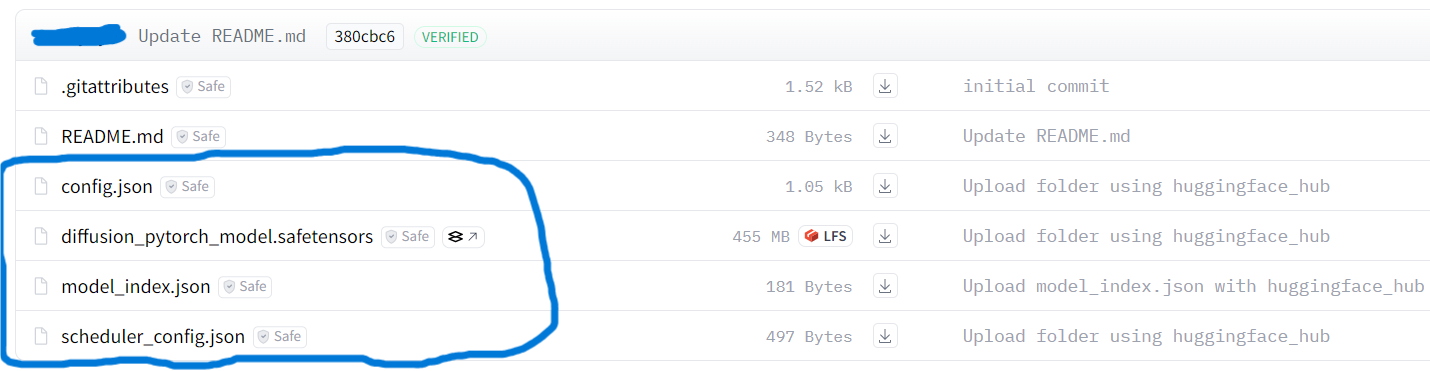

Four files should be uploaded in total.
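Before uploading, it may be worth checking that the local output folder is complete. The sketch below assumes the four files are model_index.json, scheduler/scheduler_config.json, unet/config.json, and one UNet weight file; these names reflect what I understand save_pretrained to write and can differ between library versions, so adjust the lists if your folder looks different. The temporary directory only stands in for the real output_dir.

```python
from pathlib import Path
import tempfile

# Assumed file names; verify against your own output folder.
REQUIRED_FILES = [
    "model_index.json",
    "scheduler/scheduler_config.json",
    "unet/config.json",
]
WEIGHT_CANDIDATES = [
    "unet/diffusion_pytorch_model.safetensors",
    "unet/diffusion_pytorch_model.bin",
]


def missing_pipeline_files(output_dir):
    """List the expected pipeline files that are absent from output_dir."""
    root = Path(output_dir)
    missing = [name for name in REQUIRED_FILES if not (root / name).is_file()]
    if not any((root / name).is_file() for name in WEIGHT_CANDIDATES):
        missing.append("unet/diffusion_pytorch_model.(safetensors|bin)")
    return missing


with tempfile.TemporaryDirectory() as tmp:
    # An empty folder is missing everything.
    empty_report = missing_pipeline_files(tmp)

    # Create placeholder files in the expected layout and check again.
    for name in REQUIRED_FILES + WEIGHT_CANDIDATES[:1]:
        path = Path(tmp, name)
        path.parent.mkdir(parents=True, exist_ok=True)
        path.touch()
    complete_report = missing_pipeline_files(tmp)
```

On a real run you would call `missing_pipeline_files("ddpm-demo-128")` and expect an empty list.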

After uploading, you can write a Model Card in the model repository on Hugging Face for future reference.

Using the model

# from huggingface_hub import notebook_login
# notebook_login()

from diffusers import DDPMPipeline

pipeline = DDPMPipeline.from_pretrained('User_Name/ddpm_class_128')
image = pipeline().images[0]
image

The next post will cover Stable Diffusion.